Stakeholder-Driven Evaluation

Motivation

Learning technology supporting informal learning at the workplace is designed to be used anytime and anywhere. Further, the less predictable and often hidden nature of informal learning and the large, hard-to-predict number of application scenarios challenge the evaluation of learning technology. This is particularly the case if user-centered design approaches have already involved users in large numbers and to a large extent, setting high end-user expectations. These challenges are exacerbated in inter-organizational settings in which learning technology is intended to support complex arrangements of working and learning practices.

This article proposes an approach for the participatory evaluation of scaling technology-enhanced learning in networks of organizations, and gives an overview of how this approach was applied in the Layers project and of the resulting findings. The article also references documents that contain further details on the Layers evaluation.

Theoretical Basis

The central question is how to organize the evaluation of learning technology so that it deals with these challenges and successfully demonstrates the scaling of learning technology. To address these challenges, we developed an evaluation approach that is particularly suitable for large-scale evaluations of learning technology used in workplace settings involving large groups of users. We suggest employing a richer set of data sources and data collection activities that are suited to exploring the often hidden nature of informal learning and that can be applied in workplace settings [1]. Our approach is based on the ideas of active stakeholder involvement and a pluralism of methods for evaluating learning technology [1]. Participation by stakeholders can occur at any stage of the evaluation process: in its design, in data collection, in analysis, in reporting and in managing the study [2]. Consequently, large numbers of stakeholders can be involved in the evaluation, ranging from strong supporters of the introduced learning technology to skeptical stakeholders.

The purpose of the participatory evaluation approach is to focus on learning and to draw lessons that can guide future decisions [3]. The goal is to gain insights into which changes in practices are associated with the deployment of learning technology in workplace settings (also across organizational boundaries, e.g., including the participants' knowledge exchange networks) and to investigate the diverse interests of users, designers, developers and researchers, e.g., regarding theory-building, design and sustainability. The participatory evaluation approach is a joint, collaborative stakeholder approach involving “local people”, project staff and collaborating groups [3][2]. Hence, the aim is to include not just end users, but also developers, educational institutions, networks, associations and other organizations in the evaluation process in order to achieve a collaborative learning experience.

We therefore propose a representative type of participation that gives stakeholders a voice, i.e., involves them in planning the evaluation and asks them to comment on findings, to identify lessons learned and to determine appropriate next steps [2]. The approach aims to create a shared understanding and thereby to enhance the overall quality of the evaluation. To this end, the participatory approach should use mixed methods to increase interaction, flexibility and exploration of the evaluated artefacts, using, for example, semi-structured qualitative methods such as informal conversations, observations or workshops [3][2]. Furthermore, this flexible approach accommodates changes over time in the number, roles and skills of stakeholders as well as in the external environment [3]. The details of our evaluation approach, covering the phases of preparation, data collection, data analysis and interpretation, as well as a discussion of contributions, implications and limitations, can be found in [4].

Method

We developed and applied a method that comprises three phases:

(1) Preparation: First, we held a workshop involving members of all stakeholder groups from each context, such as designers, developers, end-user interactors and researchers. In this workshop, we identified the most important stakeholders, the major focus areas of the evaluation and measurable evaluation criteria. In a focus group with end users, we then fine-tuned the evaluation criteria and the evaluation procedure.

(2) Data collection: We used a mixed-methods approach combining traditional methods such as interviews with more informal data collection methods such as regular accompanying talks or diaries. The ultimate goal was to continuously incorporate stakeholder feedback and to reflect jointly with stakeholders on what was found. For this reason, accompanying conversations were held on a regular basis (i.e., weekly) and log data were analyzed continuously. In addition to the continuous data collection, three evaluation workshops were held: first, a kick-off workshop to introduce the learning technology and assess the status quo; second, an intermediate workshop to discuss perceived changes and possibly required adaptations of the learning technology; and third, a final workshop to discuss perceived changes as well as the changes and effects expected from future, long-term usage.

(3) Data analysis: Data analysis was performed collaboratively, both for each instance and across instances. Because end users were to receive feedback on a regular basis, data analysis had to run in parallel with data collection. The interpretation of log data for each tool component was the responsibility of the respective developers. The ad-hoc interpretation of accompanying conversations and other informal interactions was performed by those members of the evaluation team who had conducted the end-user interactions. To synchronize all these ongoing data analysis activities, regular coordination meetings involving members of the evaluation and design teams were held to jointly reflect on and interpret the collected data and to fine-tune the next iterations of data collection.

Evaluation Approach Applied in Layers

This section demonstrates the applicability of our proposed approach, using the Layers evaluation as a complex, real-world case. In the following, we describe the context and procedure of applying this approach in two settings, healthcare and construction, and illustrate the findings that can be obtained with such an approach.

Healthcare Evaluation Context and Procedure

The evaluation report on the healthcare sector presents the sample, procedure, methods and findings of the summative evaluation in the healthcare pilots in year 4 (see [5]). The evaluation in the healthcare sector involved 44 healthcare professionals working in cross-organizational settings. The professionals adopted and used the Layers tools in real work settings over a period of five months. During the evaluation, we performed a number of activities that allowed us to receive instant feedback, reflections and recommendations regarding the Layers tools.

Healthcare Findings

The findings cover four core perspectives on the evaluation data: (a) potential, actual and anticipated uses, (b) design patterns, (c) challenges and (d) learning and working practices. With respect to (a), the healthcare professionals identified numerous uses of the Layers tools that could support and enhance informal learning at the workplace. With respect to (b), our findings corroborated a set of design patterns and principles already identified during the co-design process in year 3. With respect to (c), we identified tool-level, user-level and group-level challenges. Finally, with respect to (d), we combine the three aforementioned perspectives to report changes in learning and working practices related to the Layers tools.

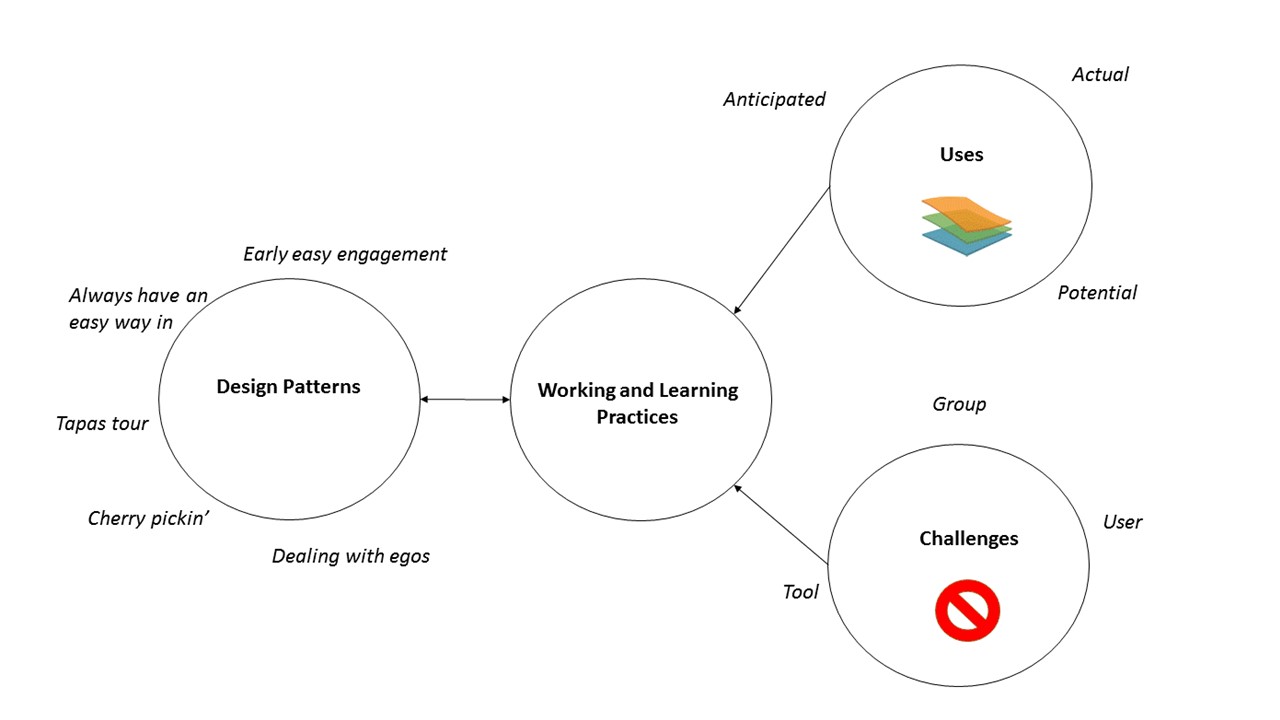

Figure 1 summarizes the key findings from the Layers summative evaluation in the healthcare sector, namely (1) anticipated, actual and potential uses, (2) design patterns, (3) tool-, user- and group-related challenges, and (4) working and learning practices. These findings are described below; further details can be found in [5].

Figure 1: Overview of key findings from the summative evaluation in the healthcare sector

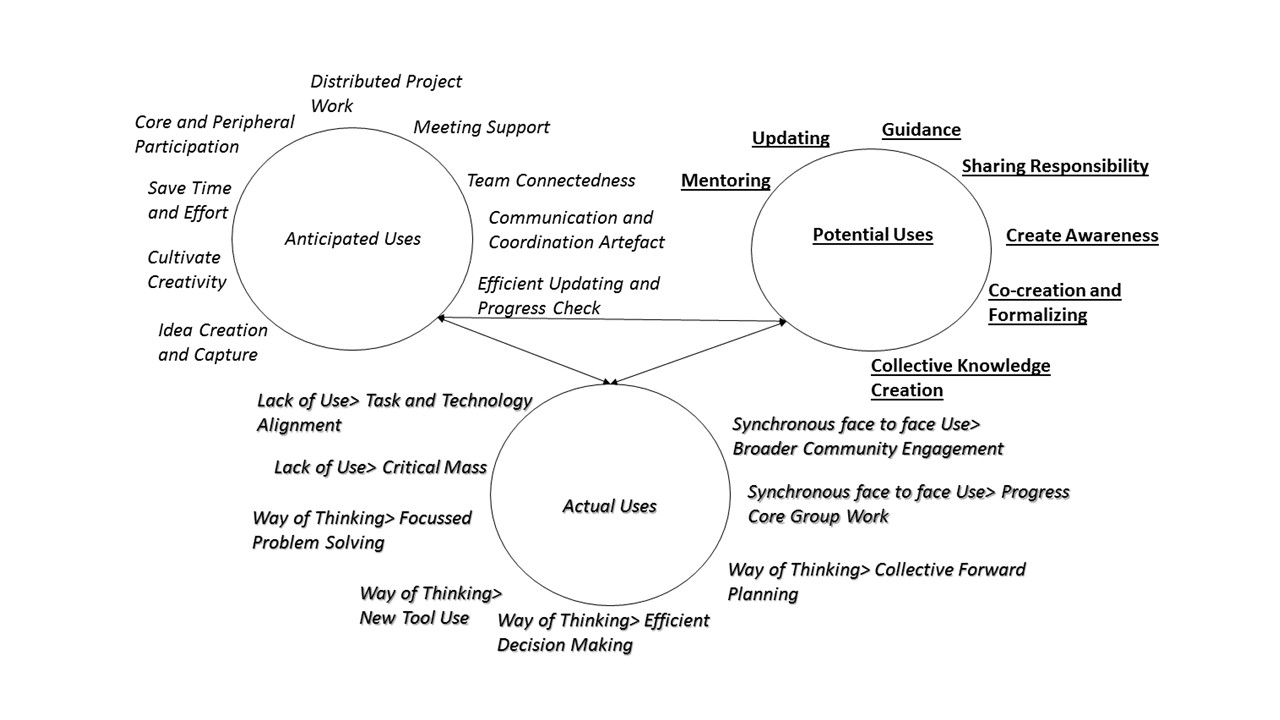

Figure 2 shows the anticipated, potential and actual uses identified across the evaluation period. In addition to the actual uses that emerged from monitoring and analyzing the activity of the evaluation participants, the healthcare professionals identified anticipated uses that could support informal learning at the workplace right at the beginning of the evaluation period, as well as potential uses as they became more familiar with the Layers tool environment.

Figure 2: Anticipated (italics), potential (underlined, bold) and actual (bold, italics) uses of the Layers tools

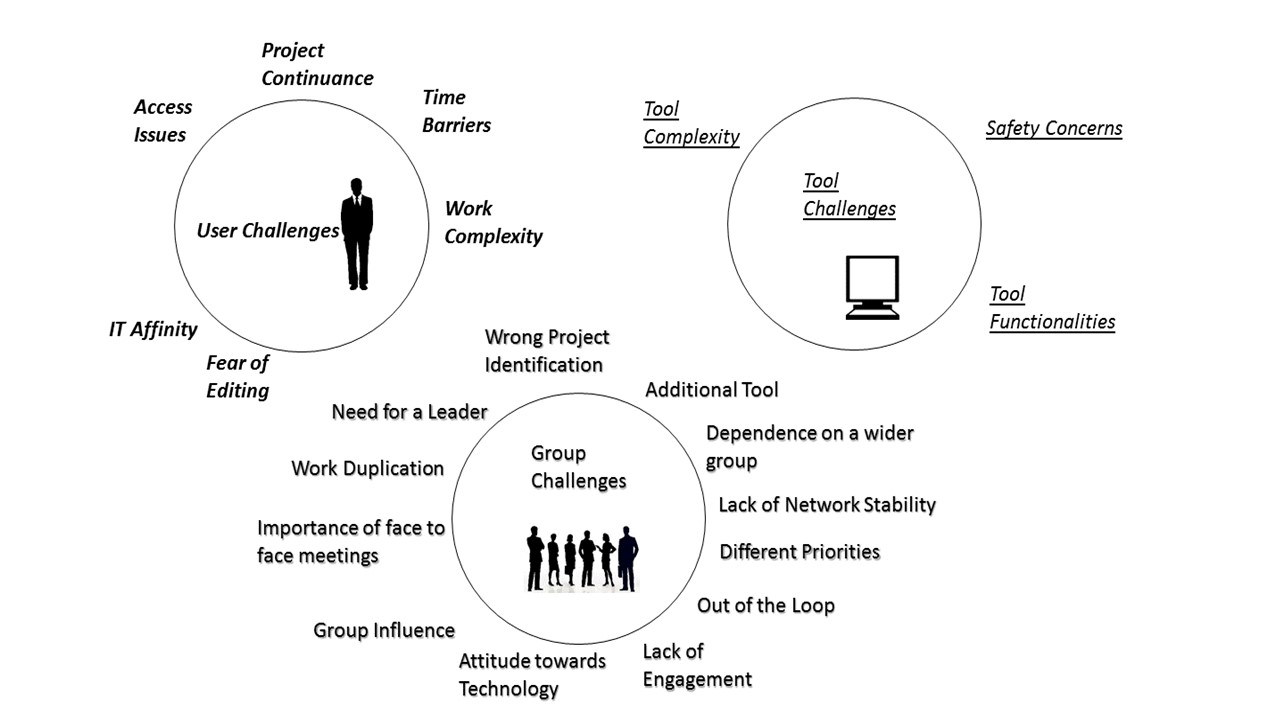

Figure 3 summarizes the challenges that participants perceived in relation to the systematic use of the Layers tools. As illustrated, the challenges are categorized at the tool, user and group levels. This categorization reveals that the healthcare professionals need to overcome not only challenges related to the Layers tools, but also their own individual barriers as well as challenges related to the wider group they work with.

Figure 3: User (italics), group (bold) and tool (underlined) challenges associated with the usage of the Layers tools

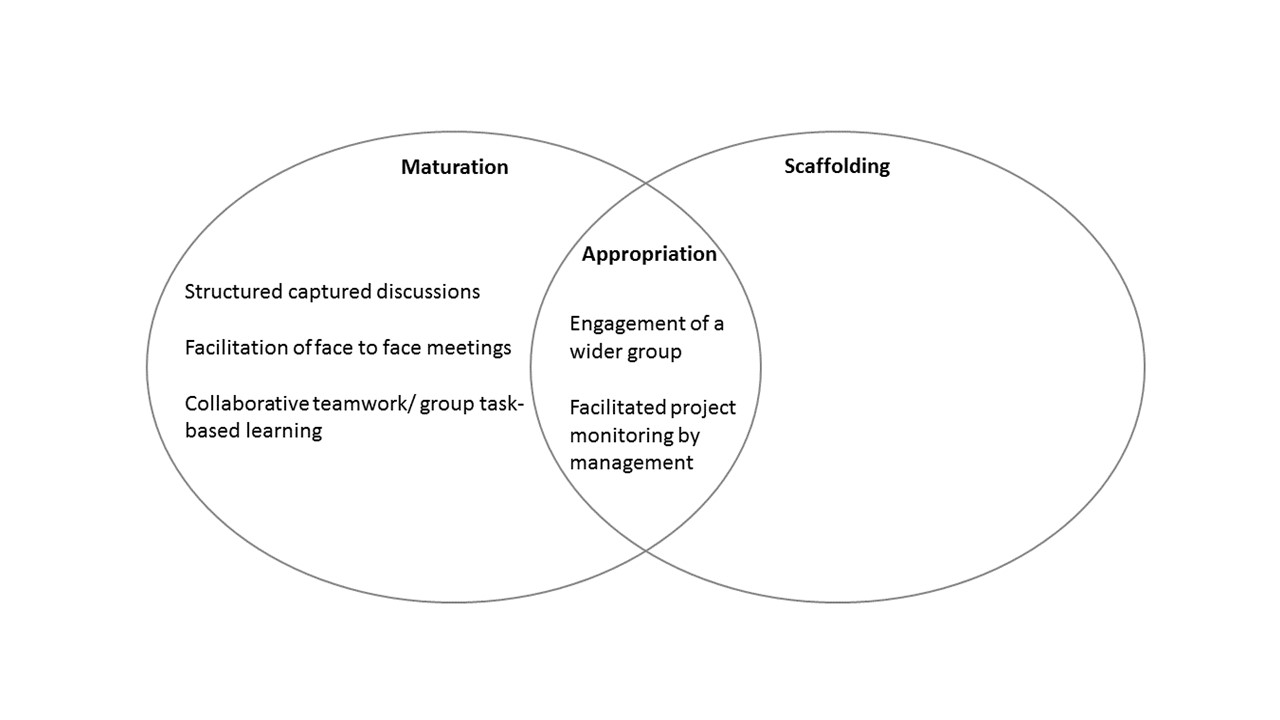

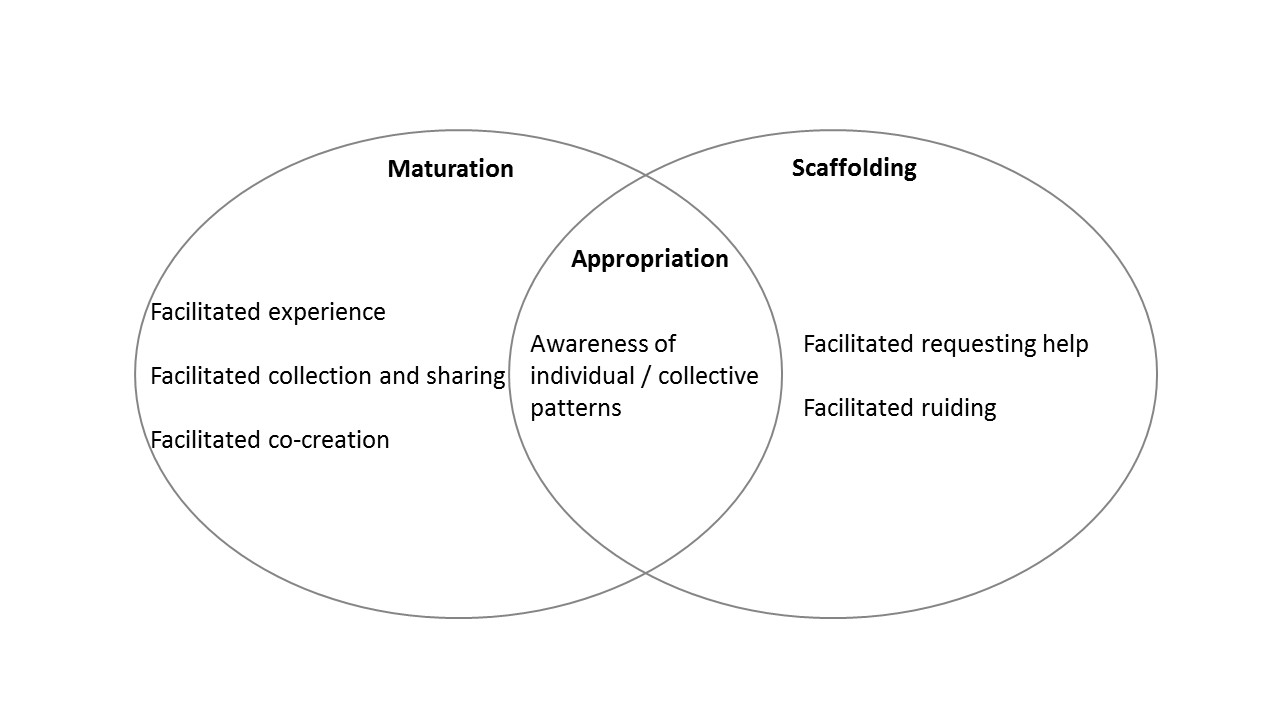

Figure 4 maps the practices we identified and described in the summative evaluation of the healthcare sector to the three knowledge management practices of maturation, scaffolding and appropriation, which are also summarized in Knowledge Appropriation in Informal Workplace Learning. For the healthcare sector, all identified practices could be related to two of these knowledge management practices, namely maturation and appropriation, whereas no substantial changes were found with respect to scaffolding practices.

Figure 4: Mapping of changed practices associated with Layers in the healthcare sector to the model of knowledge appropriation

Construction Evaluation Context and Procedure

The evaluation report on the construction sector presents the sample, procedure, methods and findings of the summative evaluation in the construction pilots in year 4 (see [6]). The evaluation in the construction sector involved approximately 100 construction professionals. The professionals adopted and used the Layers tools in real working and learning settings over a period of six months.

Construction Findings

The findings cover three core perspectives on the evaluation data: (a) challenges and barriers for scaling informal learning, (b) changes of learning and working practices associated with Layers and (c) enabled scenarios. With respect to (a), we report two perspectives on challenges and barriers during the evaluation time frame: actual barriers to tool use, including usability aspects, and challenges that can be overcome through tool use. With respect to (b), we report evidence of changes to learning and working practices associated with the Layers tools and concepts. With respect to (c), we describe scenarios enabled by Layers that provide an additional lens on the evaluation data, going beyond the changes in learning and working practices. These scenarios employ educational and organizational perspectives to reflect changes associated with Layers in real work settings in the construction sector.

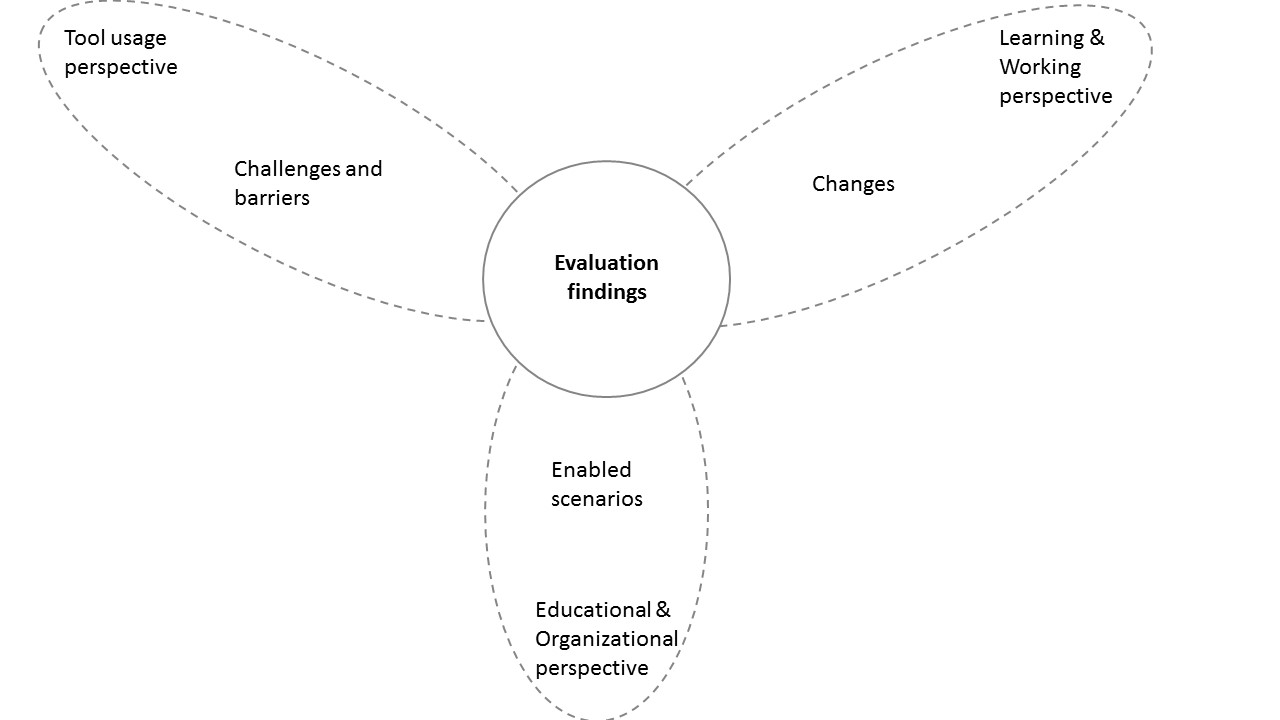

Figure 5 summarizes the key findings from the Layers summative evaluation in the construction sector, namely challenges and barriers from the perspective of tool usage, changes in learning and working practices, and scenarios enabled by Layers, which were analyzed from educational and organizational perspectives. These findings are described below; further details can be found in the Construction Evaluation Report [6].

Figure 5: Overview of key findings from the summative evaluation in the construction sector

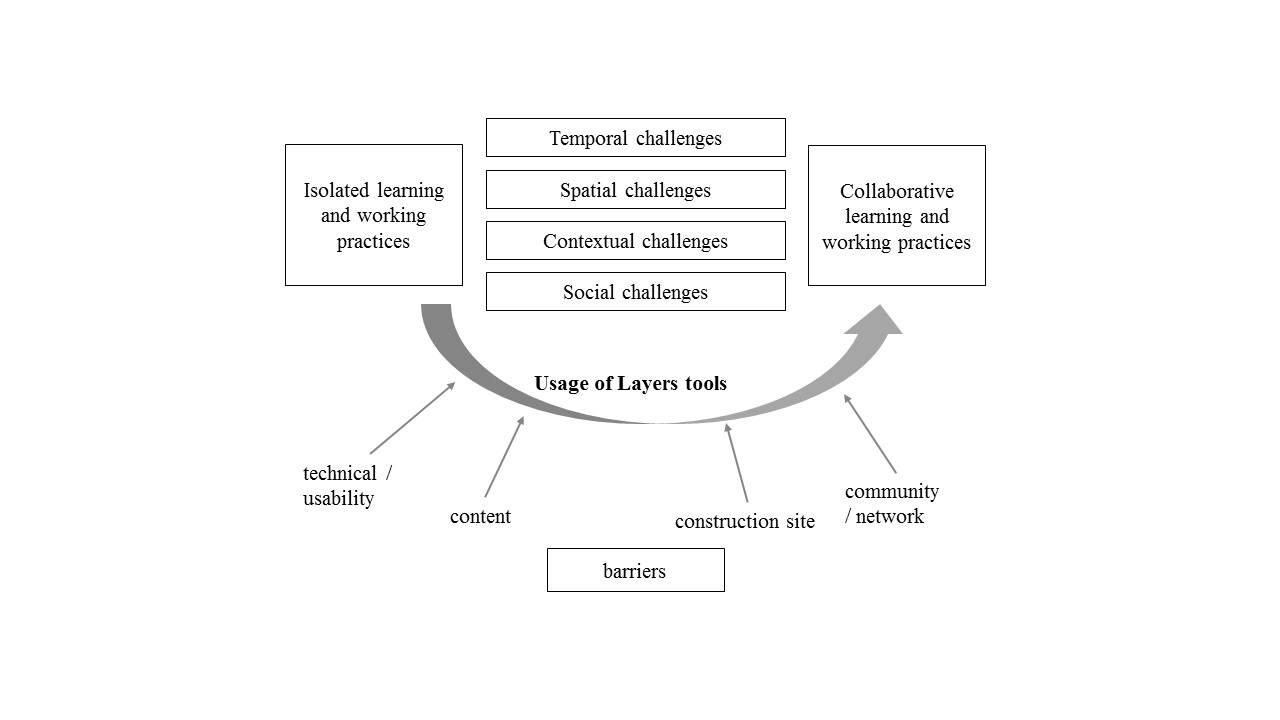

Figure 6 shows a general pattern of digital transformation: the Layers tools were associated with a shift from isolated to collaborative learning and working practices, thereby overcoming four types of challenges, namely temporal, spatial, contextual and social challenges. The usage of the Layers tools itself was associated with four types of barriers, namely technical and usability-related, content-related, construction site-related and community- or network-related barriers.

Figure 6: Barriers and challenges associated with the usage of Layers tools

Figure 7 maps the practices we identified and described in the summative evaluation of the construction sector to the three knowledge management practices of maturation, scaffolding and appropriation, which are also summarized in Knowledge Appropriation in Informal Workplace Learning.

Figure 7: Mapping of changed practices associated with Layers in the construction sector to the model of knowledge appropriation

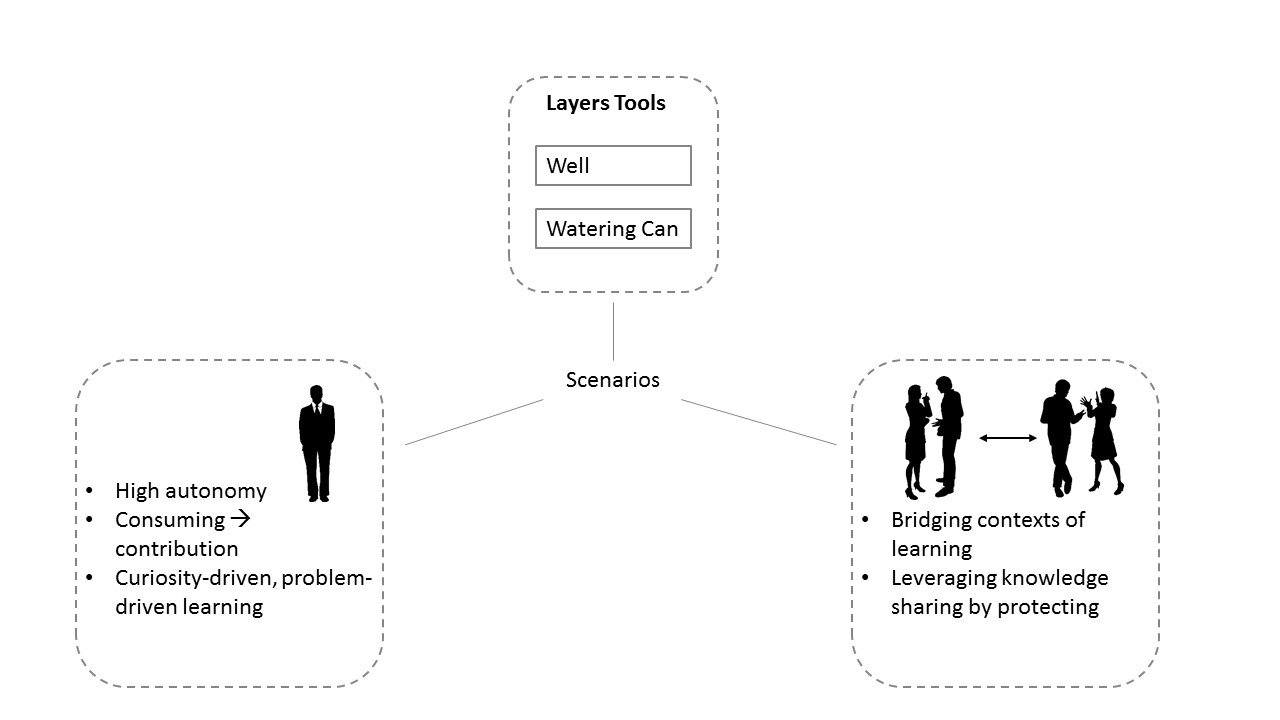

Figure 8 illustrates the six scenarios enabled by Layers, concerning (1) the use of the Layers tools as a well or as a watering can (see also Action-Oriented Learning in Apprentice Training), (2) changes in individual learning and working with respect to autonomy, style of interaction and learning (see also Changing and Sustaining Practices in Healthcare and Construction), and (3) the organization- and network-level facilitation of crossing boundaries (see also Action-Oriented Learning in Apprentice Training and Pilot Testing the AchSo Tool with Bau-ABC Apprentices) and of leveraging knowledge sharing by protecting knowledge (see also Knowledge Sharing and Protection in Networks of Organizations).

Figure 8: Scenarios enabled by Layers

Conclusion

In line with the agile, participatory co-design process employed extensively in the Layers project, the Layers summative evaluation was also a collaborative, open and complex, yet overall rewarding, effort. We developed a stakeholder-driven evaluation approach comprising several complementary data collection and data analysis activities as well as a joint interpretation of the data, involving two communities of stakeholders in both the healthcare and the construction evaluation settings.

The findings are rich, particularly with respect to changes in learning and working practices associated with Layers, but also concerning the barriers and challenges that need to be overcome to make scaling informal learning happen in networks of organizations. The urgent need for such solutions became as obvious as the digital transformation that took place in all participating networks of organizations during the Layers project lifetime in general and during the evaluation in particular. The substantial changes in working and learning practices that participants reflected upon in focus groups, interviews and a survey are complemented by the results of a log file analysis. The actual usage for real work and learning was particularly pronounced for the Learning Toolbox (LTB) and, to a lesser extent, for the other Layers tools. Overall, the manifold findings summarized in this article and presented in detail in the referenced documents contribute to our understanding of the challenges and changes in practices associated with such transformations.

Contributing Authors

Ronald Maier, Stefan Thalmann, Markus Manhart, Christina Sarigianni, Manfred Geiger, Janna Thiele, Victoria Banken

Further Reading

Thalmann, S., Ley, T., Maier, R., Treasure-Jones, T., Sarigianni, C., & Manhart, M. (2018). Evaluation at Scale: An Approach to Evaluate Technology for Informal Workplace Learning Across Contexts. International Journal of Technology Enhanced Learning. [4]

Learning Layers Healthcare Evaluation Report [5]

Learning Layers Construction Evaluation Report [6]

References

- S. Gopalakrishnan, H. Preskill, and S. J. Lu, “Next generation evaluation: Embracing complexity, connectivity, and change,” FSG, 2013. Retrieved September 30, 2013.

- I. Guijt et al., “Participatory Approaches,” Methodological Briefs: Impact Evaluation No. 5, 2014.

- D. Campilan, “Participatory evaluation of participatory research,” 2000.

- S. Thalmann, T. Ley, R. Maier, T. Treasure-Jones, C. Sarigianni, and M. Manhart, “Evaluation at Scale: An Approach to Evaluate Technology for Informal Workplace Learning Across Contexts,” International Journal of Technology Enhanced Learning, vol. 10, 2018. DOI: 10.1504/IJTEL.2018.10013211

- R. Dewey, M. Geiger, M. Kerr, R. Maier, M. Manhart, P. Santos Rodriguez, C. Sarigianni, and T. Treasure-Jones, “Report of Summative Evaluation in the Healthcare Pilots,” under review, 2016. [Online]. Available at: Link

- V. Banken, M. Geiger, R. Maier, M. Manhart, C. Sarigianni, S. Thalmann, and J. Thiele, “Report of Summative Evaluation in the Construction Pilots,” under review, 2016. [Online]. Available at: Link